Node.js code and feature comparison of AWS DynamoDB vs GCP Datastore

This article explains the differences in implementing a NoSQL database integration in Node.js with either AWS DynamoDB or GCP Datastore.

Earlier this year, I built a Node.js hackathon project with a colleague to create a Slack app integration. Since we had very little time, I quickly built the app with AWS DynamoDB, a tool that I have used a lot before.

Well, the app idea itself didn't win the hackathon, but to avoid this app going to the graveyard of forgotten code, I thought I'd install this app as a useful integration at my current workplace since we use Slack.

At that time, the app was still running as a demo on AWS, mainly because I forgot to remove my resources (it happens) for a couple of months after the hackathon finished. It ran in a Docker container on AWS ECS Fargate and was connected to AWS DynamoDB.

However, I had a limitation here. My current company uses Google Cloud Platform (GCP), not AWS. I also didn't want to have this app running on my personal account, or to have any work data (even if encrypted) stored in my accounts. Therefore, I refactored the data layer of the app to use GCP Datastore so that I could deploy it on GCP instead.

This article focuses only on the refactoring of the data layer and on the code and feature differences between DynamoDB and Datastore that I came across. I hope it is useful to anyone who is considering one of these tools and wants a comparison of how each is implemented in Node.js.

Why GCP Datastore?

The first question you might have is why GCP Datastore in the first place. GCP offers two types of NoSQL services, Datastore and Bigtable. Datastore is suited to transactional application data, with better query functionality and high scalability. Bigtable, on the other hand, is better suited to ingesting large volumes of data and is ideal for real-time analytics and IoT.

Is it Firestore or Datastore?

I got confused when I started reading the documentation here. Eventually, I figured out that the service is confusingly called both Datastore and Firestore. What added to the confusion is that some of the documentation also lives under the Firebase domain, like this document which explains the differences between Cloud Firestore and Realtime Database.

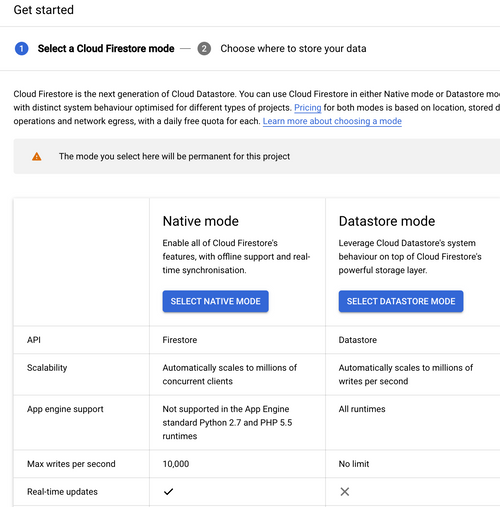

In a nutshell, there used to be Firebase and Datastore. Then, Cloud Firestore came out, which is the newest generation of Firebase database for mobile app development. When you choose Firestore in the GCP console for the first time, it will actually ask you to choose whether you want to use Firestore in Native mode or Datastore mode.

The difference between Native mode and Datastore mode is that Native mode comes with client libraries and supports real-time sync and offline use. It has similar offerings to AWS Amplify on the AWS side. For our purposes, I only want to use Firestore in Datastore mode, as I'm using this database on the backend side of a Node.js app.

Why AWS DynamoDB?

On the AWS side, DynamoDB is the best option for a fast, scalable NoSQL database. AWS also offers DocumentDB, which is a fast and scalable, fully managed, MongoDB-compatible document service. For the best comparison between them, I'd recommend this article. In short, DynamoDB is a serverless offering, while DocumentDB is a cluster-based service for which you have to specify the instance type before creating the Amazon DocumentDB cluster.

Let's start with the code implementation differences between AWS DynamoDB and GCP Datastore in a Node.js app.

Getting started with code

All the code examples come from my Slack app integration. The AWS DynamoDB code is on this branch, and the GCP Datastore is on this other branch.

To start with, these are the npm libraries that you need in a Node.js app, based on your choice of cloud.

- AWS: `npm i aws-sdk`

- GCP: `npm i @google-cloud/datastore`

Setup of tables and entities

Before we set up the data structure, note that the two services use different terms for the same concepts.

- In AWS DynamoDB, each model is a Table and each object inside it is an Item.

- In GCP Datastore, each model is a Kind and each object inside it is an Entity.

Setup of tables in AWS DynamoDB

In AWS DynamoDB, before you save any data, you need to create the table and define your KeySchema with a Hash key and a Range key (the Range key is optional). Other names for the Hash key and the Range key are Partition key and Sort key, respectively.

So, for example, if we create a table to store the different messages in a Slack app, the Hash key will be the workspaceId (the organisation ID of each company that installs the app), and the Range key will be the ID of the message (uuid).

    import { DynamoDB } from 'aws-sdk';

    const dynamoDB = new DynamoDB({
      region: 'us-west-2',
    });

    // Returns true when the table already exists.
    // Any describeTable error (including ResourceNotFoundException for a
    // missing table) is treated as "does not exist".
    const isTableExists = async (TableName: string): Promise<boolean> => {
      try {
        const tableData = await dynamoDB.describeTable({ TableName }).promise();
        return Boolean(tableData && tableData.Table);
      } catch (e) {
        return false;
      }
    };

    const MessageTableParam: DynamoDB.CreateTableInput = {
      TableName: 'Message',
      KeySchema: [
        { AttributeName: 'workspaceId', KeyType: 'HASH' },
        { AttributeName: 'uuid', KeyType: 'RANGE' },
      ],
      // Every key attribute needs a declared type ('S' = string).
      AttributeDefinitions: [
        { AttributeName: 'workspaceId', AttributeType: 'S' },
        { AttributeName: 'uuid', AttributeType: 'S' },
      ],
      BillingMode: 'PAY_PER_REQUEST',
    };

    const createTablesIfNotExist = async () => {
      if (await isTableExists(MessageTableParam.TableName)) {
        console.log('Table exists');
      } else {
        await dynamoDB.createTable(MessageTableParam).promise();
      }
    };

    createTablesIfNotExist()
      .then(() => console.log('DB setup done'))
      .catch((e) => console.error('Error occurred while creating the table', e));

Normally, you would put this in a script and run it once, when your app starts for the first time.
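One thing that is easy to trip over when writing these parameters: DynamoDB expects every attribute used in a key schema (of the table or of an index) to be declared with a type under AttributeDefinitions. As a minimal sketch, a pre-flight check could look like the following. The function name and the trimmed-down local types are my own for illustration; they are not part of the SDK or of the original app.

```typescript
// Local, simplified types covering only the fields this check looks at.
interface KeySchemaElement {
  AttributeName: string;
  KeyType: 'HASH' | 'RANGE';
}

interface TableParams {
  KeySchema: KeySchemaElement[];
  AttributeDefinitions?: { AttributeName: string; AttributeType: string }[];
  GlobalSecondaryIndexes?: { IndexName: string; KeySchema: KeySchemaElement[] }[];
}

// Hypothetical helper: lists key attributes that have no AttributeDefinitions entry.
function findUndefinedKeyAttributes(params: TableParams): string[] {
  const defined = (params.AttributeDefinitions || []).map((a) => a.AttributeName);

  // Collect the table key schema plus the key schema of every secondary index.
  const schemas = [params.KeySchema];
  for (const index of params.GlobalSecondaryIndexes || []) {
    schemas.push(index.KeySchema);
  }

  const missing: string[] = [];
  for (const schema of schemas) {
    for (const { AttributeName } of schema) {
      if (defined.indexOf(AttributeName) === -1 && missing.indexOf(AttributeName) === -1) {
        missing.push(AttributeName);
      }
    }
  }
  return missing;
}
```

Running it against the table parameters before calling createTable gives an empty array when everything is declared, and the names of the undeclared key attributes otherwise.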

Setup of Kinds in GCP Datastore

In GCP Datastore, there are no specific steps to create anything prior to saving data. A Kind comes into existence the first time you save an Entity of that kind.

Saving and Querying data

AWS DynamoDB

In AWS DynamoDB, creating new data entries is straightforward. The message item contains all your object data, but most importantly the Hash key (workspaceId) and the Range key (the message uuid), which we defined when we created the table.

    const AWS = require('aws-sdk');

    AWS.config.update({
      region: 'us-west-2',
    });

    const docClient = new AWS.DynamoDB.DocumentClient();

    //...

    await docClient
      .put({ TableName: 'Message', Item: messageItem })
      .promise();

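Similarly, to read an item back by its key, we can just do this:

    // get() resolves to an object whose Item property holds the record
    // (Item is undefined when no record matches the key).
    const { Item: message } = await docClient
      .get({
        TableName: 'Message',
        Key: { uuid, workspaceId },
      })
      .promise();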

GCP Datastore

Likewise, in GCP Datastore, the process is very similar, but we also need to define the key under the key property. The entity’s key is a way to reference the entity in queries. It includes the entity’s kind and identifier, along with its ancestor path (if available).

    const { Datastore } = require('@google-cloud/datastore');

    const datastore = new Datastore();

    await datastore.save({
      key: datastore.key(['Workspace', workspaceId, 'Message', uuid]),
      data: messageItem,
    });

Similarly, for querying data, we can just do this:

    const messageKey = datastore.key(['Workspace', workspaceId, 'Message', uuid]);

    const [message] = await datastore.get(messageKey);
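To make the difference in addressing explicit, here is a small sketch that builds the two key shapes side by side for the same message. The helper names are hypothetical and only produce plain values; the idea is that the DynamoDB key is a flat object of Hash and Range attributes, while the Datastore key is a path of kind/identifier pairs starting from the ancestor.

```typescript
// Plain shapes only, no SDK needed. Both helper names are made up for this sketch.
type DynamoMessageKey = { workspaceId: string; uuid: string };
type DatastoreMessagePath = [string, string, string, string];

// DynamoDB: the key is an object holding the Hash key and the Range key.
function dynamoMessageKey(workspaceId: string, uuid: string): DynamoMessageKey {
  return { workspaceId, uuid };
}

// Datastore: the key is a path of [ancestor kind, ancestor id, kind, id].
function datastoreMessagePath(workspaceId: string, uuid: string): DatastoreMessagePath {
  return ['Workspace', workspaceId, 'Message', uuid];
}
```

The first value is what goes under Key in a docClient.get call, and the second is what you would pass to datastore.key.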

Creating indexes for other queries on the same data

Now let's compare the features of indexes and their corresponding queries.

AWS DynamoDB

In AWS DynamoDB, you can define a global secondary index (GSI) when you create the table. For example, I would like to add a secondary index to my Message table so that I can get all messages in a Slack channel. In the new index, the Hash key is the workspaceId and the Range key is the channelId.

I can add this to the MessageTableParam variable in the same table creation script above, assuming the table hasn't been created yet. Otherwise, you will need to do a table update, and the index will take some time to become available.

    const MessageTableParam: DynamoDB.CreateTableInput = {
      TableName: 'Message',
      KeySchema: [
        { AttributeName: 'workspaceId', KeyType: 'HASH' },
        { AttributeName: 'uuid', KeyType: 'RANGE' },
      ],
      AttributeDefinitions: [ // <-- define the attributes and types
        { AttributeName: 'workspaceId', AttributeType: 'S' },
        { AttributeName: 'uuid', AttributeType: 'S' },
        { AttributeName: 'channelId', AttributeType: 'S' },
      ],
      GlobalSecondaryIndexes: [ // <-- define the secondary index
        {
          IndexName: 'ChannelIndex',
          KeySchema: [
            {
              AttributeName: 'workspaceId',
              KeyType: 'HASH',
            },
            {
              AttributeName: 'channelId',
              KeyType: 'RANGE',
            },
          ],
          Projection: {
            ProjectionType: 'ALL',
          },
        },
      ],
      BillingMode: 'PAY_PER_REQUEST',
    };
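If the table already exists, the same index has to be added through a table update instead. As a rough sketch (the function name and the simplified local types are mine, and I'm assuming an on-demand table, so no provisioned throughput is set on the index), the UpdateTable parameters could be built like this:

```typescript
// Simplified local types for the UpdateTable fields used in this sketch.
interface IndexKeyElement {
  AttributeName: string;
  KeyType: 'HASH' | 'RANGE';
}

interface AddIndexParams {
  TableName: string;
  AttributeDefinitions: { AttributeName: string; AttributeType: 'S' | 'N' | 'B' }[];
  GlobalSecondaryIndexUpdates: {
    Create: {
      IndexName: string;
      KeySchema: IndexKeyElement[];
      Projection: { ProjectionType: 'ALL' | 'KEYS_ONLY' };
    };
  }[];
}

// Hypothetical helper: parameters for adding ChannelIndex to an existing table.
function buildAddChannelIndexParams(tableName: string): AddIndexParams {
  return {
    TableName: tableName,
    // The update must declare the key attributes of the new index.
    AttributeDefinitions: [
      { AttributeName: 'workspaceId', AttributeType: 'S' },
      { AttributeName: 'channelId', AttributeType: 'S' },
    ],
    GlobalSecondaryIndexUpdates: [
      {
        Create: {
          IndexName: 'ChannelIndex',
          KeySchema: [
            { AttributeName: 'workspaceId', KeyType: 'HASH' },
            { AttributeName: 'channelId', KeyType: 'RANGE' },
          ],
          Projection: { ProjectionType: 'ALL' },
        },
      },
    ],
  };
}
```

The result would be passed to dynamoDB.updateTable(...).promise(). The index is then backfilled in the background, which is why it is not queryable straight away.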

Now, I can query all of the messages in a channel with this function:

    async function listAllMessages(workspaceId: string, channelId: string) {
      const params = {
        TableName: 'Message',
        IndexName: 'ChannelIndex',
        KeyConditionExpression: 'workspaceId = :w and channelId = :c',
        ExpressionAttributeValues: { ':w': workspaceId, ':c': channelId },
      };

      const data = await docClient.query(params).promise();
      const Items = data.Items;
      return Items as Message[];
    }

GCP Datastore

On GCP Datastore, creating an index is a bit tricky at the start. First of all, you can't create an index until you have data, because no Kind is defined before that. After some initial data is created, you can write your index definition in a YAML file.

    indexes:
    - kind: Message
      properties:
      - name: workspaceId
      - name: channelId
      - name: createdAt

You will then need to deploy the indexes to Datastore. You must either explicitly list index.yaml as an argument to gcloud app deploy (e.g. `gcloud app deploy index.yaml`), or run `gcloud datastore indexes create index.yaml` to deploy the index configuration file.

After that we can run our query like this:

    async function listAllMessages(workspaceId: string, channelId: string) {
      const query = datastore
        .createQuery('Message')
        .filter('workspaceId', '=', workspaceId)
        .filter('channelId', '=', channelId)
        .order('createdAt');

      const [messages] = await datastore.runQuery(query);
      return messages as Message[];
    }

Local development

One of my main criteria when choosing a database is how easy it is to develop locally. AWS DynamoDB was the clear winner here for me, thanks to the great support for running a local DynamoDB and connecting NoSQL Workbench to view the data visually. You can even enable time to live on the local database. The instructions are all listed in the readme file of the repository.

For GCP Datastore, you can run and connect to a local Datastore emulator. However, there was no easy way to view your data, and it fell a bit short of my local development experience with AWS DynamoDB.
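For reference, pointing either client at a local instance mostly comes down to overriding the endpoint. The sketch below only builds the option objects. DYNAMODB_ENDPOINT is a variable name I made up for this example, while DATASTORE_EMULATOR_HOST is the variable the Datastore emulator tooling uses. The ports in the comments are the usual defaults, so treat them as assumptions about your setup.

```typescript
// Environment variables this sketch reads (both optional).
interface LocalEnv {
  DYNAMODB_ENDPOINT?: string; // hypothetical name, e.g. http://localhost:8000
  DATASTORE_EMULATOR_HOST?: string; // e.g. localhost:8081
}

// Options for `new DynamoDB(...)`: only set `endpoint` when running locally.
function dynamoClientOptions(env: LocalEnv): { region: string; endpoint?: string } {
  const options: { region: string; endpoint?: string } = { region: 'us-west-2' };
  if (env.DYNAMODB_ENDPOINT) {
    options.endpoint = env.DYNAMODB_ENDPOINT;
  }
  return options;
}

// Options for `new Datastore(...)`: only set `apiEndpoint` when the emulator is used.
function datastoreClientOptions(env: LocalEnv): { apiEndpoint?: string } {
  const options: { apiEndpoint?: string } = {};
  if (env.DATASTORE_EMULATOR_HOST) {
    options.apiEndpoint = env.DATASTORE_EMULATOR_HOST;
  }
  return options;
}
```

In the app you would call these with process.env. As far as I can tell, the Datastore client also picks up DATASTORE_EMULATOR_HOST on its own, so the explicit option is mostly there to keep the two sides symmetrical.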

Time to live (TTL)

Both databases (AWS and GCP) support time to live. It is a new feature in Datastore, added earlier this year (2022). TTL is very useful for automatically deleting data based on a timestamp value saved in one of the item/entity properties.
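The two services expect that timestamp in different shapes: DynamoDB wants a Number holding Unix epoch time in seconds, while Datastore wants a date and time value. A minimal sketch of computing both, with helper names that are my own:

```typescript
const SECONDS_PER_DAY = 24 * 60 * 60;

// DynamoDB: the TTL attribute is a number of seconds since the Unix epoch.
function dynamoExpiry(now: Date, days: number): number {
  return Math.floor(now.getTime() / 1000) + days * SECONDS_PER_DAY;
}

// Datastore: the TTL property is a date and time value.
function datastoreExpiry(now: Date, days: number): Date {
  return new Date(now.getTime() + days * SECONDS_PER_DAY * 1000);
}
```

You would store the result on the item or entity under whichever property the TTL setting points at (for example an expiresAt property, a name I'm assuming here).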

Other features

It is difficult to do a full feature comparison between both databases, but let's touch on the main features that I have come across:

- Import and export of data: Both support import and export of data (AWS DynamoDB to S3, and GCP Datastore to GCS).

- Streams and triggers: I didn't find this feature in Datastore, but AWS DynamoDB has a feature called DynamoDB Streams, which you can enable on a table to allow further processing of saved data. A stream record contains information about a data modification to a single item in a DynamoDB table. You can configure the stream so that a Lambda function is triggered when a new record is created, and do further data processing there.

- Multi-region tables: this is clearly supported on AWS DynamoDB with global tables as a fully managed service. You can enable global tables per table in the console. For GCP Datastore, however, you can only choose whether your database runs in a single region or as a multi-region database when you start the project and set up Datastore for the first time.

- Backup: One of the nice things about AWS DynamoDB is point-in-time recovery (if enabled), which allows you to easily restore your table to any point in the last 35 days to revert accidental writes. You can also create manual backups at specific intervals. In GCP Datastore, there are no clear options for backups (as it's a managed service) except for the manual export.
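To give an idea of what the stream processing mentioned above looks like, here is a minimal sketch of the logic a Lambda function might run on a stream event. The types are trimmed-down local versions of the event shape, the function name is hypothetical, and it assumes the stream is configured to include the new image of each item.

```typescript
// Trimmed-down local types for the parts of a stream event used here.
interface StreamAttributeValue {
  S?: string;
  N?: string;
}

interface StreamRecord {
  eventName?: 'INSERT' | 'MODIFY' | 'REMOVE';
  dynamodb?: { NewImage?: { [name: string]: StreamAttributeValue } };
}

interface StreamEvent {
  Records: StreamRecord[];
}

// Hypothetical helper: returns the uuid of every newly inserted message.
function insertedMessageIds(event: StreamEvent): string[] {
  const ids: string[] = [];
  for (const record of event.Records) {
    if (record.eventName !== 'INSERT') continue; // skip updates and deletes
    // Stream images use the low-level attribute format, e.g. { S: 'value' }.
    const uuid = record.dynamodb?.NewImage?.uuid?.S;
    if (uuid) ids.push(uuid);
  }
  return ids;
}
```

In a real function, the exported Lambda handler would receive the event and call this helper before doing the actual processing.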

Other important factors: price and performance.

This article won't be sufficient to help someone make a final decision on whether to use GCP or AWS, as you would usually have other considerations such as performance and pricing. I haven't yet had the chance to do a deeper comparison on these points, so for now I'd like to point you to other useful articles that cover them in more detail.

- NoSQL databases comparison: Cosmos DB vs DynamoDB vs Cloud Datastore and Bigtable

- NoSQL Cloud Service Benchmarking: DynamoDB vs. Datastore (a bit old, though, so I'd be wary of the results here, as a lot has changed over the last 5 years).